Dear readers,

ABC News announced on Friday that it is hiring me as its new “Editorial Director of Data Analytics,” effective late June. This new role will let me focus on all the parts of my current job at The Economist that I like best: reporting on new public opinion polls, building aggregation models for the horse race and a wide range of political issues, and forecasting election outcomes for the US House, Senate, and presidency. It will also let me apply those skills to other areas of data journalism and gain experience in helping set the overriding goals of a newsroom.

Many of the precise day-to-day responsibilities have yet to be hammered out, partly because of an ongoing restructuring of the relationship between 538 and ABC News. But I know I’ll be working with the team at FiveThirtyEight to build interactive content around these (and other, non-political) models, help guide their data-driven coverage of politics and elections, and contribute to the 538 politics podcast and other ABC News products (cable and streaming shows, election-night coverage, etc.). I’m very excited about the future of data-first political journalism, and I’m looking forward to working alongside the talented journalists at FiveThirtyEight and ABC!

Many thanks to everyone who has expressed support so far. It really means a lot to me. I am very excited to hit the ground running (and rebuilding) when I start next month.

In the meantime, two items of business. First, some initial thoughts on what I hope will distinguish the next generation of models (political and otherwise) that we build at ABC News/538, ideas I will flesh out further on the site soon. Second, some sad but appropriate news about the future of this newsletter.

I. Polling and the “data-generating process” (DGP)

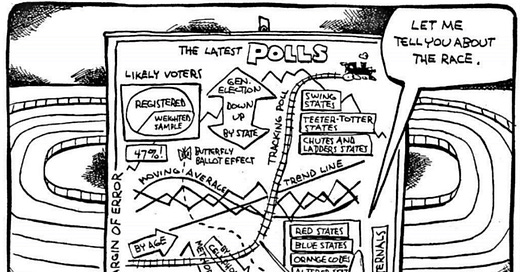

Here’s the big idea I want to contribute to poll aggregation and election forecasting in the next election cycle. As a student of political science and public opinion, I have long disagreed with most analysts about how much attention we should pay to how pollsters actually arrive at the numbers they present to us: what statisticians call the “data-generating process” (or DGP for short) for survey research. For example, throughout his tenure at 538, Nate Silver maintained that polls can be analyzed much like baseball players, with individual polls weighted and adjusted based solely on their historical record of accuracy.

But based on my education in public opinion, the hundreds of hours of research into election polls I did for The Economist over the last five years, and the historical perspective I gained from writing a whole book on polling, I believe that this is not enough. Polls should be adjusted based on their records of both accuracy and bias, and they should be evaluated on all the factors, quantitative and qualitative, that we can gather about each survey’s DGP. I have written about this in several earlier posts.

Moving (away from) averages

That’s the big philosophical divergence in how I want to shape public discourse on the polls. In practice, it means the models ABC and FiveThirtyEight publish in the future will iterate, mechanically and theoretically, on the ones they published in the past. The big difference is that when you want to capture information about individual surveys, a firm’s DGP, or the level of non-response in the electorate, the previously deployed methods (a “weighted average,” or, really, kernel-weighted local polynomial regression) are not fully up to the task. Averages and local regressions loosely track the observations, and their hyperparameters can be tuned to predict something, such as an election outcome or the reading of a poll a few months out. But they are not built to explicitly measure an underlying phenomenon from a given amount of data, which is what we are really doing when we aggregate surveys, since surveys are measures of public opinion. And while, yes, technically our goal is to produce good predictions of elections, in statistics both the journey and the destination matter, and I think we can do a lot of cool work to improve the journey.
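For readers curious what the “weighted average” family looks like mechanically, here is a minimal Python sketch of a kernel-weighted local linear regression. It assumes a Gaussian kernel and a hypothetical `bandwidth` measured in days, and it illustrates the general technique rather than any code 538 has actually used. Notice that nothing in it represents a pollster’s house effect or sampling error; the only knob is the bandwidth, which would be tuned for predictive performance.

```python
import numpy as np


def local_linear_trend(days, values, eval_days, bandwidth=14.0):
    """Kernel-weighted local linear regression (a LOESS-style smoother).

    At each evaluation day d0, fit a weighted least-squares line to the polls,
    with Gaussian kernel weights that shrink as polls get further from d0, and
    return the fitted value at d0. `bandwidth` (in days) is a hypothetical
    tuning parameter, not a value from any published model.
    """
    days = np.asarray(days, dtype=float)
    values = np.asarray(values, dtype=float)
    estimates = []
    for d0 in np.atleast_1d(eval_days):
        weights = np.exp(-0.5 * ((days - d0) / bandwidth) ** 2)
        # Design matrix: intercept plus slope, with time centered on d0.
        X = np.column_stack([np.ones_like(days), days - d0])
        XtW = X.T * weights  # equivalent to X.T @ np.diag(weights)
        beta = np.linalg.solve(XtW @ X, XtW @ values)
        estimates.append(beta[0])  # the intercept is the fitted value at d0
    return np.array(estimates)


# Hypothetical polls: day of fieldwork and a candidate's share in percent.
poll_days = [0, 3, 7, 10, 14, 18, 21]
poll_shares = [47.0, 48.5, 46.0, 49.0, 48.0, 50.0, 49.5]
trend = local_linear_trend(poll_days, poll_shares, eval_days=range(0, 22, 7))
print(np.round(trend, 1))
```

The smoother will track the polls well enough, but if every poll shared the same bias, the curve would simply inherit it, because the method has no parameter in which to represent that bias.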

The gory details here are that I see the future of polling aggregation moving away from ad hoc, machine-optimized weighting schemes and moving averages and towards the widespread adoption of a family of measurement-oriented Bayesian statistical techniques that includes structural equation models, latent variable models, hidden Markov models, state-space models, and dynamic linear models, to name a few. These models can account for the various factors that influence our observations (the way a pre-election poll is conducted, for example), which are only indirect measures of the underlying state we are interested in tracking: most often support for candidates in elections, but also things like issue attitudes and candidate favorability ratings.

Think of these models as working in two steps. One step models how poll results are shaped by (a) overall public opinion on a given day and (b) certain poll-level variables, such as mode, population, survey firm, the firm’s previous bias, weighting variables, and level of methodological disclosure. The other step allows that state of public opinion to move by some parameterized amount over time.
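A minimal sketch of the two-step idea, written as a Kalman filter over a random-walk (local-level) state-space model. This is a simplification assumed for illustration: house effects are passed in as fixed, hypothetical numbers (`house_effects`), sampling error is taken to be binomial, and the daily drift `tau` is a made-up value. A full Bayesian model would instead estimate the house effects, the other poll-level adjustments, and the drift jointly with opinion itself.

```python
import numpy as np


def filter_opinion(polls, house_effects, n_days, tau=0.3, mu0=50.0, var0=25.0):
    """Forward Kalman filter for a random-walk model of latent opinion.

    polls: list of (day, share_pct, sample_size, firm) tuples.
    house_effects: dict firm -> assumed bias in points (treated as known here).
    tau: hypothetical daily random-walk standard deviation, in points.
    mu0, var0: prior mean and variance of opinion before day 0.
    Returns the filtered mean and variance of opinion on each day.
    """
    by_day = {}
    for day, share, n, firm in polls:
        by_day.setdefault(day, []).append((share, n, firm))

    mean, var = mu0, var0
    means, variances = [], []
    for t in range(n_days):
        if t > 0:
            # Transition step: opinion drifts by a random-walk innovation.
            var += tau ** 2
        for share, n, firm in by_day.get(t, []):
            # Measurement step: poll = opinion + house effect + sampling noise.
            p = share / 100.0
            obs_var = 100.0 ** 2 * p * (1 - p) / n  # binomial variance, points^2
            adjusted = share - house_effects.get(firm, 0.0)
            gain = var / (var + obs_var)
            mean += gain * (adjusted - mean)
            var *= 1 - gain
        means.append(mean)
        variances.append(var)
    return np.array(means), np.array(variances)


# Hypothetical polls and house effects, for illustration only.
polls = [(0, 48.0, 800, "Firm A"), (2, 45.0, 1000, "Firm B"), (5, 47.5, 600, "Firm A")]
house = {"Firm A": 1.0, "Firm B": -2.0}
means, variances = filter_opinion(polls, house, n_days=7)
print(np.round(means, 2), np.round(np.sqrt(variances), 2))
```

Other poll-level variables (mode, population, weighting, disclosure) would enter the same way, as additional terms in the measurement step, and uncertainty grows on days without polls because of the transition step.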

The very cool thing is that models like these can be expanded to model support for multiple candidates or attitudes across geographies simultaneously. And that is why they are in my mind the natural next step for poll aggregation. I’m excited about exploring them with the team at ABC News and FiveThirtyEight.

Measure and explain, then predict

Aside from statistical matters, there are also journalistic advantages to building a model of public opinion that puts measurement first and prediction second. First, it allows us to explore stories about all the components of polling directly with the model, rather than running parallel analyses that do not all fit together. For example, we can ask our computer which firms are the most biased towards Republicans in a given election year. The model fits that parameter at the same time as it figures out in which states public opinion is shifting the most, or which candidates share the largest parts of each other’s coalitions (aka, have the most highly correlated vote shares). And you can think of many other questions to answer: What is the probability of an Electoral College tie? What is the chance that your single vote decided the election? If Joe Biden wins Michigan, what is the chance he also wins Pennsylvania and Wisconsin, and the election? Etc.
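Questions like the Michigan one become simple counting exercises once a model produces correlated simulations of state outcomes. The sketch below assumes hypothetical expected margins, a common standard deviation, and a 0.8 correlation among the three states (none of these come from any real forecast), then compares the unconditional chance of winning both Pennsylvania and Wisconsin with the chance conditional on winning Michigan.

```python
import numpy as np


def simulate_margins(means, cov, n_sims=200_000, seed=0):
    """Draw correlated state margins (in points) from a multivariate normal."""
    rng = np.random.default_rng(seed)
    return rng.multivariate_normal(means, cov, size=n_sims)


def prob_win_all(draws, targets, given=None):
    """Share of simulations in which every `targets` margin is positive.

    If `given` is a state index, only simulations where that state's margin
    is positive are counted, which yields a conditional probability.
    """
    wins = draws > 0
    if given is not None:
        wins = wins[wins[:, given]]
    return wins[:, targets].all(axis=1).mean()


# Hypothetical inputs, in the order MI, PA, WI.
means = np.array([2.0, 1.0, 1.0])
sd = 4.0
corr = np.array([[1.0, 0.8, 0.8],
                 [0.8, 1.0, 0.8],
                 [0.8, 0.8, 1.0]])
cov = corr * sd ** 2
draws = simulate_margins(means, cov)
p_pa_wi = prob_win_all(draws, targets=[1, 2])
p_pa_wi_given_mi = prob_win_all(draws, targets=[1, 2], given=0)
print(f"P(PA and WI) = {p_pa_wi:.3f}; P(PA and WI | MI) = {p_pa_wi_given_mi:.3f}")
```

Because the states are positively correlated, conditioning on a Michigan win raises the joint probability. The same draws could answer the tie question too, by summing each simulation’s electoral votes and counting exact ties, given a full set of states.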

In short, it’s very powerful journalistically to have all the math happening in one program, rather than some Frankenstein amalgamation of models and scripts all contributing point estimates and standard errors that get reconciled later.

This modeling workflow also guides us to think about measuring and explaining voter behavior first and predicting it second, rather than the other way around. That ordering is a real advantage for the journalistic goals our models are meant to serve. For example, much of the criticism of polling averages and election forecasts in the 2022 cycle (to the extent that any of it was valid) stemmed from the fact that averages were ingesting low-quality polls that happened to be almost universally biased to the right. A prediction-first mindset would have encouraged a forecaster to look at the historical test cases for their model. They would likely have concluded that so-called “house effects” would account for that bias, as they had in the past, and that the best thing to do was to stick with the model and run with it.

A measurement-first mindset, on the other hand, would have encouraged you to think about the way the data was generated and how that would affect the model’s predictions, and thus our inferences about the world. In this case, if all available polls are, on average, too favorable to the same side, then a mean-reversion-based house effect adjustment would not properly compensate for that bias, because it only corrects each pollster relative to the others. Plus, a workflow that let you adjust polls for their previous empirical biases, or exclude them altogether to test how sensitive the estimates are to certain pollsters’ data, would have helped you think more clearly about the mechanisms and manifestations of new types of bias.
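To see why a mean-reverting adjustment misses a shared bias, consider a toy case with three hypothetical pollsters that all lean right of a true Democratic share of 50 percent. The sketch assumes each firm’s historical bias (`past_bias`) is known and persists into the current cycle; in practice it would have to be estimated, with uncertainty.

```python
import numpy as np


def mean_reverting_adjustment(firm_means):
    """Remove each firm's deviation from the cross-firm average.

    House effects estimated this way sum to zero by construction, so they
    can remove differences *between* pollsters but not a bias they share.
    """
    firm_means = np.asarray(firm_means, dtype=float)
    house_effects = firm_means - firm_means.mean()
    return firm_means - house_effects


def empirical_bias_adjustment(firm_means, past_bias):
    """Subtract each firm's historical bias (poll minus actual result)."""
    return np.asarray(firm_means, dtype=float) - np.asarray(past_bias, dtype=float)


def leave_one_firm_out(firm_means):
    """Average of the remaining firms when each firm is dropped in turn."""
    firm_means = np.asarray(firm_means, dtype=float)
    return np.array([np.delete(firm_means, i).mean() for i in range(len(firm_means))])


# Hypothetical: the true Democratic share is 50, but all three firms lean right.
firm_means = [47.0, 46.0, 45.0]
past_bias = [-3.0, -4.0, -5.0]  # assumed known and persistent

print(mean_reverting_adjustment(firm_means))              # every firm at 46: shared bias survives
print(empirical_bias_adjustment(firm_means, past_bias))  # every firm at 50
print(leave_one_firm_out(firm_means))                     # 45.5, 46.0, 46.5
```

The leave-one-out averages are a crude version of the sensitivity check described above: if dropping one firm moves the estimate a lot, that firm deserves a closer look.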

I also think this view would have helped us guard against some of the overconfidence about Joe Biden in the 2020 election. The election model I helped build at The Economist was arguably overconfident that he would avoid tail outcomes (although by some metrics, such as the accuracy of its vote share predictions, it performed the best in the industry). More importantly, the industry-wide focus on probabilities distracted us from communicating clearly with readers about what would happen if that November brought a polling error larger than 2016’s, regardless of the “low” probability of that event.
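One simple way to put that kind of scenario in front of readers is to shift every polling margin by the same amount and list which states change hands. The sketch below assumes a uniform error across states (real polling errors are correlated but not identical), and the state names and margins are hypothetical placeholders, not real data.

```python
def flips_under_uniform_error(poll_margins, error):
    """States whose polling leader changes if every margin shifts by `error`.

    poll_margins: dict mapping state -> margin in points (candidate A minus B).
    error: uniform shift in points applied to every state (negative favors B).
    """
    return sorted(
        state for state, margin in poll_margins.items()
        if (margin > 0) != (margin + error > 0)
    )


# Hypothetical polling margins.
margins = {"State A": 8.0, "State B": 4.5, "State C": 2.0, "State D": -1.0}
for error in (-3.0, -5.0, 2.0):
    print(error, flips_under_uniform_error(margins, error))
```

With these made-up numbers, a 3-point miss against candidate A flips only State C, while a 5-point miss also flips State B; laying out scenarios like this says something a single probability does not.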

Foxes and hedgehogs, and something in the middle

In Nate Silver’s essay announcing the leveling-up of FiveThirtyEight from a blog at the New York Times to a fully fledged data journalism website housed at ESPN, he posited that journalism exists on a two-dimensional plane. Any given story sits somewhere along an axis running from quantitative to qualitative, and simultaneously along another axis running from anecdotal and ad hoc to rigorous and empirical.

I think FiveThirtyEight is the best example in American political media of a website that exists solely to take a position as north and west as possible on that graph while still telling a complete story. But I also think, as a Bayesian would say, that we have ignored too much of the anecdotal qualitative data that we can include in our models of the world — both mental and formal — given that we have a framework to include both. As the saying goes, the plural of anecdote is data. (You may have heard the contrary maxim, but this is in fact a misquote.) And a Bayesian modeler should take in all the information they can and adjust their priors accordingly.

It was not a quantitative argument, for instance, to say in 2022 that you should put less weight on the averages because polls in competitive races were coming disproportionately from very right-leaning pollsters. But exploring what the world would look like given that new information would have helped tell a more complete story about the midterms.
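The Bayesian reasoning here can be made concrete with the standard normal-normal conjugate update, in which qualitative judgment can enter as a wider or narrower observation variance. In this sketch the prior (say, from fundamentals) and the polling average are hypothetical numbers, and inflating the average’s standard deviation from 2 to 4 points is an assumed stand-in for discounting an average built mostly from one set of pollsters.

```python
def normal_update(prior_mean, prior_sd, obs_mean, obs_sd):
    """Conjugate normal-normal update: combine a prior with one noisy measurement.

    Each source is weighted by its precision (1 / variance), so a larger
    obs_sd means the observation moves the posterior less.
    """
    prior_prec = 1.0 / prior_sd ** 2
    obs_prec = 1.0 / obs_sd ** 2
    post_var = 1.0 / (prior_prec + obs_prec)
    post_mean = post_var * (prior_prec * prior_mean + obs_prec * obs_mean)
    return post_mean, post_var ** 0.5


# Hypothetical: a prior of 50 (sd 3) and a polling average of 46.
print(normal_update(50.0, 3.0, 46.0, 2.0))  # posterior mean about 47.2
print(normal_update(50.0, 3.0, 46.0, 4.0))  # about 48.6: the average gets less weight
```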

Another takeaway from Silver’s “What the Fox Knows” article is, as the title of the piece and the logo of his site allude to, the lesson of Isaiah Berlin’s essay building on a phrase from the Greek poet Archilochus: “The fox knows many things, but the hedgehog knows one big thing.” The parable describes a natural contest between a predatory fox and his hedgehog prey, in which the fox deploys many tactics to capture his dinner. He sneaks, he runs, he jumps, he plays dead. But the hedgehog can do one big thing perfectly: roll into a spiky ball for the ultimate defense.

As the popularized lesson now goes, Berlin used the idea to divide writers and thinkers into two categories: hedgehogs, who view the world through the lens of a single theory or idea, and foxes, who draw on a variety of information and experiences when making inferences about the world. Silver’s implication is that data journalists ought to be foxes, guided by a wide range of data, experiences, and Bayesian reasoning when making forecasts, rather than hedgehogs, who always come back to the same set of ideas, grandiose theories, and archetypes that get them stuck in holes (or local minima), blinding them to the reality the fox can see.

My view is that we ought to be something in between the fox and the hedgehog, or perhaps both at the same time. Of his essay, Berlin said he “meant it as a kind of enjoyable intellectual game, but it was taken seriously. Every classification throws light on something.” The lesson may be that we have taken the distinction between the two too far. It is a false choice to be either a fox or a hedgehog; good explanatory journalism can be both empirical and idea-driven.

A commitment to democracy, for example, is not necessarily Foxy, but it is the thread that ties together all of our journalism on politics. A piece on the parties today would not be clear-eyed without that idea running through it. Nor is it wholly Foxy to commit ourselves, when analyzing public opinion, to accounting for all the nuances of the processes that generate the data we are aggregating. You might argue that this is incompatible with the view that the highest degree of predictive accuracy is the end goal of data journalism. For the sake of explanation and exploration, of visualization and conversation, I am willing to make that concession.

II. Programming note

That brings me back to this newsletter, where I think I have struck a good balance between predictive journalism and idealism about public opinion and democracy. Writing weekly(ish) dispatches to the nearly 7,000 of you over the last six years has also been a great way to focus my thinking. (Although the comments section and the back-and-forth with y’all never really evolved the way I wanted it to.)

However, part of my job at ABC News will be to write a similar, election-forecast-oriented newsletter as the campaign season approaches. This blog directly clashes with that responsibility, so the rules and standards team there has directed me to post here only about personal side projects and adjacent topics, only occasionally, and only after review by my superiors.

That means I won’t be sending weekly updates here anymore. Because I won’t be providing that value to readers, and to avoid any conflicts of interest, I will also be suspending all paid memberships to the newsletter. Current subscribers will receive prorated refunds for any remaining issues they purchased. While I will only send the occasional email now, usually with links to what I’m working on at ABC News/FiveThirtyEight, I will continue to post regularly on Substack Notes (social media posts fall under a separate internal policy). And of course, I will keep posting on Twitter.

It is sad to lose this regular forum for conversation, but I am still very excited about what comes next. One hope I had when writing Strength in Numbers was that the discourse about pre-election polls would shift away from hyper-accuracy in election prediction and towards measurement uncertainty and explanation about politics. I have had a lot of ideas about visualizing forecasts and using interactive journalism to let readers play with parameters that I did not have the resources to pursue elsewhere. This new job gives me the chance, as well as the megaphone I will need, to accomplish those goals.

I hope y’all will come along for the ride. The team at ABC News’s new, politics-focused version of FiveThirtyEight (if they continue calling it that) is doing some really cool stuff. I can’t wait to work with them, and to share that work with the world.

Until then,

Elliott