The backlash to strategic issue polling is not what it seems 📊

August 15, 2021

Objections to “survey liberalism” and “popularism” stem in part from a misunderstanding of how polls actually get used in politics

This week’s newsletter is long because I have a lot on my mind, and because I missed last week’s note and would like to make amends. Please check out the German election polling model that my Economist colleagues and I finally released this week; it has been taking up most of my after-work hours. As usual, if you have thoughts, you can reply to this message or email me at elliott at gelliottmorris DOT COM.

There is an ongoing conversation among Democratic activists, strategists and (mostly left-leaning) newspaper columnists about so-called “survey liberalism” and “popularism”, terms referring to the use of political polls to craft political strategy and, in particular, to deem some things unpopular and worth avoiding. This conversation is missing a good-faith reading of the history and accuracy of political polling, including how polls get used in journalism and government. As someone who has studied this, I would like to offer a remedy.

Ryan Cooper, a political commentator for The Week magazine, published an article two weeks ago titled “David Shor and the Democrats’ new cult of the popular”. In it, he derides Shor, a progressive data analyst, for supporting the idea (roughly speaking) that Democrats should do things that are popular and be quiet about things that aren’t. In Cooper’s view, this means “placating the racism of white voters, avoiding slogans like "defund the police," being cautious on immigration reform, heavily means-testing welfare programs, and so on — basically the suite of policies moderate Democrats already support — because that's what polls say most voters like.” He is skeptical this is a sensible strategy, for a few reasons.

(For now, set aside the rather glaring unanswered questions of whether this is actually what the polls say, or what most Democrats support.)

Cooper’s biggest objection seems to stem from the noise in the polls. I am going to quote him at length because I think he gets most of this right, though he blows some parts out of proportion and gets a few key particulars wrong:

> The first and most obvious problem with survey liberalism is that polling is a highly imprecise business. Many, many studies done from decades ago to the present day have demonstrated that slightly changing the wording of a poll, or which questions are included, or the order in which the questions are asked, can significantly alter results.

> In the modern age, there is a further problem that getting accurate samples has gotten harder and harder. A pollster obviously cannot speak to every person in the country, so they use a statistically representative sample (and/or weight their results to adjust for the demographic background). This was fairly easy 30 years ago, but today, most people no longer have landlines, and few people answer unknown cell phone numbers anymore thanks to a constant deluge of spam calls. Polling firms have repeatedly overhauled their methodology in an attempt to account for this, but their budgets are not unlimited, accuracy is a moving target, and their recent record is not great. National forecasts were fairly close in both the 2020 and 2016 elections, but several state polls were quite badly off in both years, and in the same direction — each time underestimating Trump's support in critical swing states.

Cooper is roughly correct in the first paragraph, but he is making a big error of magnitude. It is not his fault; the press has routinely gotten this wrong. So let’s look at the facts: for presidential elections, the polls have overestimated Democratic candidates by about one and a half points in vote share nationally over the last two cycles, and by about three points in close states. That is a pretty big miss when you’re trying to predict an election whose outcome can flip on half a percentage point, but I would not call the performance “highly imprecise”. After all, vote share can range anywhere from 0 to 1, not just from 0.52 to 0.54. A “highly imprecise” method would be one with a margin of error of 10 or 20 points, akin to the television pundits who opine on elections without first consulting the history of the data. More to the point, no political actor is being persuaded to support a policy based solely on polls that show it with a 4-point margin among voters. That would be too risky! The case we’re concerned with is a poll that is 30 or 40 points wrong, and that is simply not something that occurs.
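To make the point about magnitude concrete, here is a back-of-the-envelope sketch in Python. The 1.5- and 3-point error sizes come from the paragraph above; the 51.5 percent candidate and the 70 percent policy are hypothetical numbers chosen for illustration, the function names are made up for this sketch, and random sampling error and undecided respondents are ignored entirely.

```python
# Back-of-the-envelope check: can a systematic polling error of the size
# described above change which side has majority support?
# Error sizes (1.5 and 3 points) come from the text; the 51.5% candidate and
# the 70% policy are hypothetical. Sampling error and undecideds are ignored.


def corrected_support(poll_support: float, error: float) -> float:
    """Support (in percent) after removing an error that overstated it by `error` points."""
    return poll_support - error


def majority_flips(poll_support: float, error: float) -> bool:
    """True if the corrected figure disagrees with the poll on whether support tops 50%."""
    return (poll_support > 50) != (corrected_support(poll_support, error) > 50)


def error_needed_to_flip(poll_support: float) -> float:
    """Points a poll showing majority support must overstate it by to be wrong about who leads.

    With no undecided respondents, moving one point of support shifts the
    support-minus-opposition margin by two, so the margin error is twice this.
    """
    return poll_support - 50


examples = {"close election": 51.5, "lopsided policy": 70.0}  # hypothetical inputs

for label, support in examples.items():
    for error in (1.5, 3.0):
        print(f"{label}: {support}% in the poll, {error}-pt error -> "
              f"{corrected_support(support, error)}%, flips: {majority_flips(support, error)}")
    print(f"  error needed to flip: {error_needed_to_flip(support)} pts of support")
```

For the hypothetical 70 percent policy, the poll would have to overstate support by 20 points, a 40-point error on the margin, before it was wrong about where the majority stands. A three-point miss is enough to flip a close race and nowhere near enough to flip a lopsided issue.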

Another issue is one of proportionality. While some studies have shown that question wording can change whether a clear majority supports or opposes a policy, that happens rarely, and the effect more often stems from the problem of non-attitudes: people respond to how researchers cue them about a policy they don’t know much about, rather than being given ample chance to say they “don’t know”.

This is, I think, the better objection to “survey liberalism”. Cooper does mention it later, but I think it should come sooner. The logic goes that “regular people” don’t know about most issues, so we can’t ask them about those issues, so we can’t craft strategy around them. Again, that is fair enough, but it’s not exactly an accurate reading of how polling works in the real world. For one thing, look at how detailed the questions are from Data for Progress, a left-leaning but high-quality pollster:

They also provide an option for respondents to say they don’t know.

Now, sure, Data for Progress is only one pollster. Maybe others act worse? I’d submit that, on average, they do not. Go through the crosstabs of Monmouth’s and Quinnipiac’s recent polls on the infrastructure bill, for example, and you will see that they also provide both ample description of the issue and an out for respondents. Pollsters also address this issue inherently by asking questions of national significance; usually, polls are done on the news of the day or on big, salient political debates. So while this is a theoretical limit of polling, it is not so large an issue in practice.

So what is this really about? Is Cooper caught up in the backlash to “do popular stuff!” because he believes that using polls to advance popular ideas is a strategy with too large a margin for error? We don’t really have to wonder. He goes on:

> In practice, scrupulousness is a rare commodity in politics. I don't doubt that the survey liberalism adherents are familiar with most if not all of the data I have cited so far. But as a famous repeat victim of Twitter hoaxes once wrote, "a man's at odds to know his mind cause his mind is aught he has to know it with." All the ways that polls might be slanted or biased raises the possibility of cynical abuse (just rigging the questions to get what you want), or self-deception, as Shor himself says was true of the Hillary Clinton campaign. No matter how sure you are in your own head that you are being Mr. Data Professional, everyone who thinks and writes about politics has strong political views and that will inevitably color how they treat polls — there will always be a temptation to seize on (or commission) convenient polls, or ignore inconvenient ones. (I of course am guilty of this myself.)

> When Shor asserts that "the more you means-test a program the more popular it gets" — absurdly implying that the pandemic stimulus checks, which registered support from about three-quarters of Americans in multiple polls, would be even more popular if they went to only the single poorest person in the entire country — he's revealing how a contrarian delight in needling the left can lead to (at a minimum) ridiculous exaggeration. When Yglesias argues that Matt Damon would be a good political candidate because he was reported to have only recently stopped using homophobic slurs in a country where same-sex marriage polls at 70 percent approval (though Damon later denied the story), we see a heated dislike of identity-centered "wokeness" overriding common sense.

You know what? I agree with Cooper here, to an extent. Some of the punditry he highlights is really bad. But it’s also divorced from the debate over majoritarian poll-pulism. I think we can reasonably assert that Cooper’s argument against “survey liberalism” is at least as much an attack on the ideologues as on the ideology, if not more so. Does Cooper disagree, for example, with the progressives at Data for Progress parlaying their polls into a meeting on climate policy with Joe Biden’s political team during the 2020 election?

A related question: how much of the case that we shouldn’t do what (polls say) is popular rests on an objection to a few people using polls to advance a cause we don’t agree with? On fear of what bad people might do with the power? This is an old objection to using survey research as a political instrument. George Gallup raises this very point in his 1944 book A Guide to Public Opinion Polls, and it is one of Lindsay Rogers’ chief criticisms of the polls in his well-timed 1948 book The Pollsters. How can we unleash this very powerful tool on our democracy when there are people who will use it for bad ends? To, in the relevant case, say that a popular redistributive policy shouldn’t be passed until a means-testing requirement is added to it (if that is indeed what Shor said)? Or to just avoid the slogan “defund the police” because most people reject it?

A natural retort is that there are bad actors in any system. People are going to abuse polls just as they abused the party nominating system both before (and after!) it was democratized, or campaign finance law, or any other such institution. I would rather the good guys have polls too than have no polls at all. Public opinion surveys are, more often than not, a corrective to the biases of our institutions, which tend to push against the people. Putting my mathematical political theorist hat on: we are more concerned with moving the average outcome than with making minor changes in the variance.

If we can sum up the objection to polling as “using polls in politics is good when people use them the right way,” then I don’t think the backlash to “survey liberalism” really has two legs to stand on.

Continuing in Cooper’s article, there are a host of other commonsense objections to a political strategy that is 100% crafted using the polls. Policies can get more popular as people learn about them; the country can change its mind (e.g., on same-sex marriage); symbolic political strength is just as important as spatial issue-based alignment with the median voter. I would like to add one more double-barreled objection to an entirely poll-based political strategy: first, that many people do not have attitudes about even the most salient political issues; and second, since these people take their cues from their political elites, that the polls thus magnify the relative strength of collective party membership at any given time.

But this is a straw man! Political leaders are not making their decisions entirely based on the polls. It is farcical to think that pollsters in Biden’s White House, for example, are convincing him of every action to take, speech to make, or policy to support. Even John Kennedy, who hired one of the first groups of behavioral political scientists to tell him whether to vocally support civil rights in his 1960 primary campaign, ultimately could not say whether the polls made a difference in his calculation. The advice he was getting from them (to do so, perhaps emphatically) was the same advice he was getting from his other advisors. (I will say more on this history in my book; one of the reasons I wrote it is to help people understand the real extent of polls’ political power in DC. It’s less than you think!)

What this seems to be about is an objection to the rare excesses of poll-based politics, rather than the reality of it. It is worth saying, clearly, that polls are usually a force for good.

What follows in Cooper’s piece is more bashing of neoliberals, which convinces me we’re not actually having a debate about polls and popularism, but about the policies that popularists advocate. I agree with a lot of what he says about the timidity of Democrats today, but this is all beside the point.

Here’s the closing paragraph:

> The bedrock political reality today is that the Democrats have had the consistent support of a solid majority of the country for well over a decade, while Republicans are plotting to exploit the wildly un-democratic features of our 18th-century Constitution to institute permanent minority rule. For instance, as Shor has (correctly) argued, the Supreme Court is a tyrannical, racist institution that should be radically disempowered. The same is true of the Senate. To ensure even a remotely fair election next year, much less put through reforms to these institutions, Democrats will need to muster guts and determination that are not currently in evidence.

I agree: the Senate is irredeemably biased against the majority, the Supreme Court is anti-democratic, and the Electoral College is a bonkers way to elect a president, with all sorts of whites-maximizing biases and errors of institutional design. But imagine we didn’t have the polls to paint a portrait of America. How would our understanding of the average person’s policy preferences be different if we only ever observed the results of our biennial and quadrennial elections? Or talked to Trump voters at diners? Or counted political lawn signs in our neighborhoods? What if what a majoritarian politician should do were determined by the Electoral College instead of by the actual will of the majority, observed through multiple readings by good pollsters with robust wording?

Again, I think the current world is the better one. The more polls, the better. After all, what Ryan Cooper seems to want (a political system that, on average, represents the true opinions of the numerical majority) is exactly what polls deliver.

. . .

In summary, I will readily concede that sticking too close to the polls is a historically inadvisable position. Democracy by survey leaves us overexposed to errors in measurement and response that will always exist with political surveys. But critics have spun the wheel too far against polls and advanced arguments that are equally misaligned with both the history and the science of polling. Their arguments also, evidently, attack a straw man: very few politicians are truly driven by public opinion surveys.

But despite their missteps, polls have tended to be the most reliable general indicators of the mood of the country on multiple social and political issues we would not be able to measure otherwise, and on topics that elections don’t fully or regularly capture. The middle ground between throwing them out and always trusting them is to fund polling outfits that constantly test and re-test their sampling methods, weighting algorithms, and question wording; to average the data from all these trustworthy firms together in a system that lets us track sentiment over time; and to use that data to hold politicians accountable when the public has clearly decided for or against their positions. So should we be arguing against polls, or against bad methods? We ought to ask how democracy can be improved by better data, rather than argue for discarding it.
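The averaging step in that paragraph can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method behind any particular forecast: polls are assumed to arrive as (date, pollster, support) tuples, and the function name `poll_average`, the 14-day window, the per-firm averaging and the example polls are all hypothetical.

```python
from collections import defaultdict
from datetime import date, timedelta
from statistics import mean


def poll_average(polls, as_of, window_days=14):
    """Simple rolling poll average as of a given date (hypothetical helper).

    `polls` is a list of (field_date, pollster, support_pct) tuples. Only polls
    fielded in the `window_days` days up to and including `as_of` are used.
    Each pollster's polls are averaged first, then those per-firm averages are
    averaged, so a firm that polls often does not drown out one that polls rarely.
    Returns None when no polls fall inside the window.
    """
    start = as_of - timedelta(days=window_days)
    by_pollster = defaultdict(list)
    for field_date, pollster, support in polls:
        if start < field_date <= as_of:
            by_pollster[pollster].append(support)
    if not by_pollster:
        return None
    return mean(mean(values) for values in by_pollster.values())


# Made-up polls from hypothetical firms, purely for illustration.
example_polls = [
    (date(2021, 8, 2), "Firm A", 61.0),
    (date(2021, 8, 5), "Firm A", 63.0),
    (date(2021, 8, 9), "Firm B", 57.0),
    (date(2021, 8, 12), "Firm C", 61.0),
]

# Tracking sentiment over time: recompute the average on successive dates.
for day in (date(2021, 8, 6), date(2021, 8, 14), date(2021, 8, 20)):
    print(day, poll_average(example_polls, day))
```

With these made-up inputs, the average is 62.0 on 6 August (Firm A only), 60.0 on 14 August, and 59.0 on 20 August, once Firm A’s polls age out of the window. Serious aggregators typically go further, for example adjusting for pollster house effects and weighting by sample size, so treat this as an illustration of the idea rather than a recipe.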

We also ought to be skeptical of people who think they know politics and the public better than the people who study them every day. The polls are, after all, just a way of talking to the people. By the very nature of information exposure, I do not believe newspaper columnists have the upper hand on this over the pollsters. At least on average.

I suspect the outspoken opponents of so-called “survey liberalism” (many of them liberals and progressives themselves) would agree that the problem with America’s political order is that it is too far removed from correction by the people, rather than not distant enough. I would urge them to think about what they can do to improve the very powerful tool the pollsters have given them, not for the sake of converting them into “popularists” but for the sake of amplifying the people’s voices in government. I imagine a country with an even more rigorous polling industry: one with more money, more data, and more sophisticated statistical tools to check how well pollsters are reflecting the will of the people. Such a reality will come, in part, when newspaper columnists and politicians buy into it.

There is a line stretching from the manufacturing of consent on one end to the discovery of it on the other. After studying the history of polling a great deal, I think it is undeniable that, on average, polls put us closer to the correct end of that spectrum. Despite their faults, they are a force for good. If only people could see them as such.

Posts for subscribers

August 14: A subscribers-only thread on the popularity of infrastructure spending, and on getting left behind

Assorted links

One more plug for the election forecasting model my Economist colleagues and I created to simulate uncertainty in polls of the upcoming Bundestagwahl (parliamentary elections) in Germany:

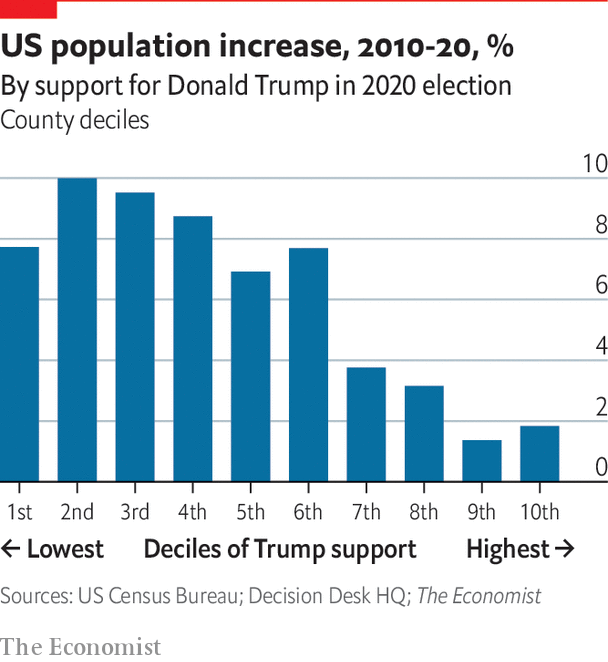

Here’s an article by me on the Census results, which show that blue America is growing and red America is shrinking. It includes a description of this chart:

Thanks for subscribing

That’s it for this week. Thanks so much for reading. If you have any feedback, please send it to me at this address — or respond directly to this email. I love to talk with readers and am very responsive to your messages.

If you want more content, you can sign up for subscribers-only posts below. I’ll send you one or two extra articles each week, and you get access to a weekly gated thread for subscribers. As a reminder, I have cheaper subscriptions for students. Please do your part and share this article online if you enjoyed it.

Question (& possibly a dumb one) reg. the recent census release: I've seen a number of takes on ET along the lines of ~ "the census release spells good news for democrats because democratic strongholds are growing; places that tend to vote republican are losing population." However, this is just an update of the population change that *has* happened, and with the election last year we have at least one data point showing how the updated population behaves electorally. I can see how that take is valid if these trends were to continue (/if current demographic coalitions continue to hold), but does it necessarily spell good news in the present/near future?

I'll be honest, I haven't read the Economist article you mentioned, so the answer may be "go read the article"

I've memorized my social security number because I know I'll have to recite it verbatim on numerous occasions. Politicians similarly memorize issue positions that they will regularly be called upon to voice. Most attitudes are not like that. We don't know exactly what we will need, if anything, when, or in what form. So we don't memorize our own attitudes but recreate and adjust them to specific situations where they are useful. There are a host of influences on these pronouncements, including recall of past announcements, a generic starting place for similar questions, the implicit bias of our language, and characteristics of the questioner. There is a similar diversity of influences on what politicians express as policy stances. If attitude describes a predisposition to consistently respond, then politicians have attitudes on policy; if attitude describes a more subjective feeling or thinking, it is less clear that politicians' stances are attitudes. The phrase "nonattitude" conjures a dichotomous choice between robotic regurgitations and random panic attacks. Neither is the situation for most of us or politicians. We are more or less.