Moving off the gold standard

Newer technologies in public opinion polling are now routinely beating "gold standard" methods

The traditional telephone public opinion poll, I’m excited to say, is increasingly looking like a thing of the past. To be clear, I am not cheering for the downfall of polls that reach American adults by random-digit dialing (RDD); rather, I am celebrating the accuracy of newer, more technically sophisticated methods.

Public opinion research, it finally seems, has entered the 21st century.

I want to draw your attention to two recently published analyses of poll accuracy in the 2020 election. The first is by Peter K. Enns and Jake Rothschild, political scientists at Cornell and Northwestern University. They aggregated 355 polls conducted between September 1 and November 1, 2020, measured each poll’s error against the actual election results, and grouped the polls by whether the pollster used a “probability,” non-probability, or mixed-mode survey. A good example of a probability poll is the ABC/Washington Post poll, which uses RDD to reach respondents, whereas YouGov’s online polls are considered non-probability because their respondents opt in rather than being drawn at random from the full population.1

Enns and Rothschild find that mixed-method polls (such as an RDD sample supplemented with online interviews, or a survey combining mailed solicitations with an online sample) and non-probability polls were much more accurate than purely “probability” polls that use live interviewers, the approach often called the “gold standard” of survey research. They write:

The figure below shows that among surveys of likely voters (LV), probability-sample polls produced an average raw error of 6.1%, while non-probability samples were, on average, 2.5 percentage points more accurate, with a mean raw error of 3.6%. Mixed samples were more accurate still, with an average raw error of just 1.3%.

We also analyzed absolute error — i.e., the average of the absolute values of each survey’s error — and mixed and non-probability samples again were more accurate, with absolute error rates of 3.1 and 3.7%, respectively, compared to 6.1% for probability-based surveys.
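To make the difference between raw and absolute error concrete, here is a minimal Python sketch. It assumes each poll’s error is the poll’s margin minus the actual margin, in percentage points (a sign convention chosen for illustration; Enns and Rothschild’s exact definition may differ), and the poll margins are hypothetical numbers, not their data.

```python
from statistics import mean

def poll_errors(poll_margins, actual_margin):
    """Signed error for each poll: poll margin minus actual margin (points)."""
    return [m - actual_margin for m in poll_margins]

def raw_error(errors):
    """Mean signed error: over- and underestimates can cancel out."""
    return mean(errors)

def absolute_error(errors):
    """Mean of absolute errors: every miss counts, whatever its direction."""
    return mean(abs(e) for e in errors)

# Hypothetical polls of a race the leading candidate actually won by 4 points.
actual = 4.0
polls = [10.0, 8.0, 2.0, 6.0]
errs = poll_errors(polls, actual)  # [6.0, 4.0, -2.0, 2.0]
print(raw_error(errs))       # 2.5
print(absolute_error(errs))  # 3.5
```

Because the +6 and -2 misses partly cancel, the raw error (2.5) understates the typical size of a miss (3.5). Absolute error can never be smaller than the magnitude of raw error, which is why it is the stricter yardstick.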

The authors also attach this graphic:

Further, they found that RDD polls were not significantly more accurate than other types of probability polls, including online polls whose respondents were recruited by postcards mailed to randomly selected addresses on the Postal Service’s master list of mailing addresses (a method called address-based sampling, or ABS), and polls drawn from a voter file.
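The sampling step behind ABS can be sketched in a few lines of Python: draw a simple random sample from an address frame, so every address has the same known chance of being mailed a postcard. The frame below is a hypothetical list of placeholder strings standing in for the Postal Service’s list, and `draw_abs_sample` is a name made up for this illustration; real ABS designs may add stratification and follow-up mailings, which this sketch omits.

```python
import random

def draw_abs_sample(address_frame, n, seed=None):
    """Simple random sample of n addresses, drawn without replacement.

    Every address has the same inclusion probability, n / len(address_frame),
    which is what makes this a probability sample.
    """
    rng = random.Random(seed)
    sample = rng.sample(address_frame, n)
    inclusion_prob = n / len(address_frame)
    return sample, inclusion_prob

# Hypothetical frame of 10,000 addresses (placeholders, not real data).
frame = [f"address_{i}" for i in range(10_000)]
mailed, p = draw_abs_sample(frame, 500, seed=42)
print(len(mailed), p)  # 500 0.05
```

Each sampled household would then be invited to answer online; its design weight is 1 / p (here 20) before any adjustment for who actually responds.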


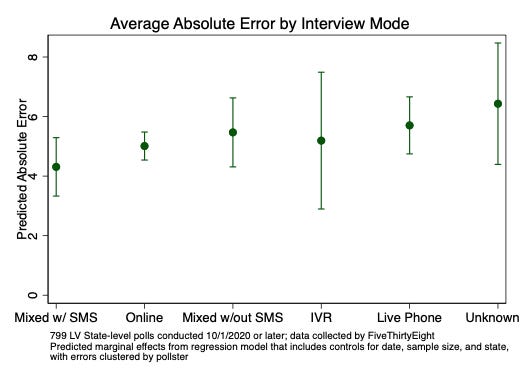

Having established that non-probability and mixed-method polls outperformed the “gold standard” RDD probability polls last year, let’s dive deeper into the methods of each group. Kevin Collins, the founder of a survey company that conducts polls both through live interviewers over text message and by sending respondents to online surveys via SMS, wrote a blog post earlier this month finding that online and mixed-mode polls performed better than polls using live interviewers. Here is his graph of the average error by mode:

Importantly, Collins’s categorization likely places probability-based online surveys, like those conducted by the Pew Research Center and the Associated Press-NORC, in the same online bucket as opt-in polls. If you separated the non-probability surveys from that group, there’s a good chance they would do even better, based on the Enns and Rothschild findings above.
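Here is a minimal Python sketch of that regrouping. The poll records, field names (`mode`, `probability`, `error`), and error values are all hypothetical, chosen only to show the mechanics; they are not Collins’s data or his categories.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical poll records: mode, whether the sample is probability-based,
# and absolute error in percentage points. Illustrative values only.
polls = [
    {"mode": "live phone", "probability": True, "error": 6.0},
    {"mode": "live phone", "probability": True, "error": 5.0},
    {"mode": "online", "probability": True, "error": 5.0},
    {"mode": "online", "probability": False, "error": 3.0},
    {"mode": "online", "probability": False, "error": 4.0},
]

def average_error_by(polls, key):
    """Group polls by key(poll) and return the mean error of each group."""
    groups = defaultdict(list)
    for poll in polls:
        groups[key(poll)].append(poll["error"])
    return {label: mean(errors) for label, errors in groups.items()}

# Grouping by mode alone pools probability and opt-in online polls.
by_mode = average_error_by(polls, lambda p: p["mode"])

# Splitting each mode by sample type separates the two kinds of online poll.
by_mode_and_sample = average_error_by(
    polls,
    lambda p: (p["mode"], "probability" if p["probability"] else "non-probability"),
)
print(by_mode)
print(by_mode_and_sample)
```

With these toy numbers the pooled online average (4.0) sits between the probability-based online poll (5.0) and the opt-in polls (3.5), which is the kind of gap a mode-only grouping can hide.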

The future of polling

Despite the poor performance of the polls last year, especially those using the “gold standard” method, the future of polling looks bright. One analysis of 2014 election polls by Natalie Jackson, then blogging for HuffPost and now the research director at PRRI, suggested that phone polls may not be the reigning champions forever. The time for them to pass the baton has arrived.

The best polls last year were conducted using some of the most experimental approaches in the industry, including SMS text-to-web polls and mixes of ABS/online data. The non-probability opt-in online polls from firms such as YouGov also performed well, perhaps due to all the modeling work they put into getting representative samples and handling other selection-bias issues. Polls that blend traditional methods with more modern techniques of sample selection and correction are also getting really, really good. A decade ago, the industry was happy if new approaches simply met the accuracy of live-phone polls. Now, they’re beating them.
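The post doesn’t describe YouGov’s modeling, so as a generic illustration of one common correction for unrepresentative samples, here is a Python sketch of post-stratification weighting: each respondent is weighted so the sample’s mix across one grouping variable matches assumed population shares. The education groups, shares, and responses are hypothetical, and the function names are made up for this example.

```python
from collections import Counter

def poststratify(respondents, population_shares):
    """One weight per respondent so weighted group shares match the population.

    weight = population share of the group / sample share of the group
    """
    counts = Counter(r["group"] for r in respondents)
    n = len(respondents)
    return [
        population_shares[r["group"]] / (counts[r["group"]] / n)
        for r in respondents
    ]

def weighted_share(respondents, weights, answer):
    """Weighted fraction of respondents giving `answer`."""
    total = sum(weights)
    hits = sum(w for r, w in zip(respondents, weights) if r["vote"] == answer)
    return hits / total

# Hypothetical opt-in sample that over-represents college graduates (8 of 10).
sample = (
    [{"group": "college", "vote": "A"} for _ in range(6)]
    + [{"group": "college", "vote": "B"} for _ in range(2)]
    + [{"group": "non-college", "vote": "A"}]
    + [{"group": "non-college", "vote": "B"}]
)
# Assumed population mix: 40% college, 60% non-college.
shares = {"college": 0.4, "non-college": 0.6}

weights = poststratify(sample, shares)
unweighted = sum(r["vote"] == "A" for r in sample) / len(sample)
weighted = weighted_share(sample, weights, "A")
print(unweighted, weighted)
```

Weighting moves support for option A from 70% to roughly 60%, because the under-represented non-college respondents, who split evenly, now count more. Real adjustments typically weight on several variables at once and can only correct for biases that the chosen variables capture.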

Pollsters are finally having broad success at taking the pulse of democracy online. Given all the problems with telephone polls, accuracy and cost chief among them, that is worth cheering about.

1. Neither are traditional phone polls, though! I have been putting “probability” in quotes because there is selection into the pool of responses for each survey mode, including RDD. Not everyone has a phone, and some groups are more likely than others to pick up.
