A revised method for measuring pollster quality, now published at 538

A few tips for reading 538's updated pollster ratings, and why I think they're so cool

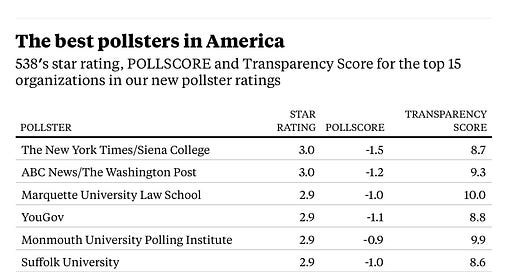

Today at 538 we unveiled our latest set of pollster ratings for the upcoming 2024 general election. This update includes grades for 540 polling organizations based on two key criteria: their empirical record of accuracy and methodological transparency.

Here are the products of all our work. The interactive dashboard for these new ratings is particularly cool. I’m also proud of the extremely detailed public methodology post we put out; if we’re saying that pollsters should show their work, it’s good that we do the same. And be sure to read the announcement article on the ABC News website, which explores the points below in greater detail.

For those wanting a shorter read on all this, here are the highlights:

Methodological Updates

For these new ratings, I developed a new methodology for 538 that ranks pollsters based on their empirical accuracy and methodological transparency. The methodology considers factors such as overall absolute error in past election polls, bias towards a particular party, performance relative to others in the same cycle, recency, sample size, time to the election, and the impact of herding.

1. Accuracy

The accuracy metric now penalizes pollsters that show routine bias towards one party, regardless of how they do on absolute error. It also grades on a curve within each cycle: pollsters who perform well when others perform poorly, without exhibiting a pattern of bias, receive extra credit. The updated accuracy metric also factors in poll recency, sample size, time to the election, and whether we believe a pollster is herding.
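To see why bias is tracked separately from absolute error, here is a minimal sketch with made-up numbers. It is not 538's actual formula: the function names, the margin convention (Democratic minus Republican, in points), and the data are all assumptions for illustration, and it leaves out the adjustments for recency, sample size, timing, and herding.

```python
from statistics import mean

def absolute_error(poll_margins, actual_margins):
    """Average size of the miss, ignoring direction."""
    return mean(abs(p - a) for p, a in zip(poll_margins, actual_margins))

def signed_bias(poll_margins, actual_margins):
    """Average miss with direction kept; positive means too Democratic here."""
    return mean(p - a for p, a in zip(poll_margins, actual_margins))

# Hypothetical election results and two hypothetical pollsters.
actual = [2.0, -1.0, 4.0, 0.0]
one_sided = [5.0, 2.0, 7.0, 3.0]    # misses by 3 points, always in the same direction
two_sided = [5.0, -4.0, 7.0, -3.0]  # misses by 3 points, in alternating directions

for name, polls in [("one_sided", one_sided), ("two_sided", two_sided)]:
    print(name, absolute_error(polls, actual), signed_bias(polls, actual))
```

Both hypothetical pollsters miss by the same amount on average, but only the first one misses in a consistent direction, which is the pattern the bias penalty is meant to catch.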

2. Methodological Transparency

One of the coolest things we do now is directly measure pollster methodological transparency. To do this, we developed 10 questions to ask about each poll that count for one transparency point each. Then 538’s researchers looked at over 8,000 poll reports to count up how many points a pollster got for that survey. A pollster’s overall score is a weighted average of its transparency across all of its polls, with higher weight for recent polls. This took a ton of effort to collect, so bonus props to 538’s research team.
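As a rough illustration of a recency-weighted average like the one described above, here is a small sketch. The exponential decay and the `half_life_years` parameter are assumptions made for this example; the post only says that recent polls get higher weight, not how that weight is computed.

```python
def transparency_score(polls, half_life_years=4.0):
    """Recency-weighted average of per-poll transparency points.

    polls: list of (points_out_of_10, age_in_years) tuples.
    half_life_years: hypothetical decay rate; a poll this old counts half
    as much as a brand-new one.
    """
    weights = [0.5 ** (age / half_life_years) for _, age in polls]
    weighted_points = sum(w * points for w, (points, _) in zip(weights, polls))
    return weighted_points / sum(weights)

# A hypothetical pollster: a recent poll answering 8 of the 10 questions,
# and a four-year-old poll answering only 4.
example_polls = [(8, 0.0), (4, 4.0)]
print(transparency_score(example_polls))
```

With these made-up inputs the score lands above the simple average of 6, because the newer and more transparent poll counts for more.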

POLLSCORE and Transparency Score

The combined metrics of accuracy (which we call POLLSCORE, a silly backronym for “Predictive Optimization of Latent skill Level in Surveys, Considering Overall Record, Empirically”) and transparency are used to generate the final ranks of pollsters we put on our dashboard. These two dimensions of pollster quality sort pollsters into four groups: those who have performed well and shown their work, those who show their work but perform poorly, those who perform well but don't reveal their process, and bad pollsters who do neither.

The Star Grading System

The “ideal” pollster is one who has a long track record of empirical accuracy and who shares a plethora of information about their methods. With that in mind, we can use a mathematical model to measure how far each pollster is from ideal across our two dimensions. We convert the number from our algorithm into a star grading system, with three stars representing the ideal (or, in our case, Pareto-optimal) pollster and 0.5 stars representing the worst.
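One way to picture the "distance from ideal" idea is the sketch below. Everything specific in it is an assumption: it supposes both dimensions have been rescaled to run from 0 (worst) to 1 (best), uses straight-line distance to the ideal corner, and maps that distance linearly onto the 0.5 to 3.0 star range. The real model may differ on every one of those choices.

```python
import math

def star_grade(accuracy, transparency):
    """Map a pollster's position to a star grade between 0.5 and 3.0.

    accuracy, transparency: hypothetical scores rescaled to [0, 1],
    where (1, 1) is the ideal pollster.
    """
    distance = math.hypot(1.0 - accuracy, 1.0 - transparency)
    max_distance = math.hypot(1.0, 1.0)  # distance from the worst corner (0, 0)
    return 3.0 - 2.5 * (distance / max_distance)

print(star_grade(1.0, 1.0))  # ideal pollster
print(star_grade(0.9, 0.4))  # accurate but not very transparent
print(star_grade(0.0, 0.0))  # worst on both dimensions
```

Under these assumptions, a pollster that is strong on one dimension but weak on the other still sits well short of three stars, since the shortfall on either axis adds to the distance.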

The current top pollster in America is the NYT/Siena poll, to no surprise. It’s an accurate poll, and its pollsters tell us a lot about how they generate their data!

Notes on Interpretation

Please keep two important notes on interpretation in mind. First, the rank ordering of pollsters should not be taken too seriously, as it is a little noisy and changes by a few positions whenever we add new data.

And second, keep in mind the scale of what we’re doing here. The best pollsters in our data do about 2-3 points better than the worst in predicting past elections. That is less than the margin of error for most surveys! As the 2024 general election approaches, we hope our ratings will be valuable tools for assessing the reliability of polling data. But if there is undetected bias lurking under all the polls, even the best can be off by a fair amount.

This seems like progress, but I think you're still missing the forest for the trees. These are not "pollster" ratings but "polling firm" ratings. Some very skilled pollsters and some extremely incompetent pollsters work at the same firm. (And by incompetent, I mean that they probably have trouble with basic math.) The only prerequisite for being a political pollster is the ability to land clients.

As the political industry has consolidated and these firms have grown in size, the polls are increasingly the output of a single pollster and not a unified work product. You'll see pollsters at the same firm try to poach clients from each other.

If you can leverage the transparency score to reveal who actually conducted the poll, and rate the individual(s) behind the poll, this would probably greatly improve both the model accuracy and the polling industry as a whole.

Nice! Is there any interpretation of the POLLSCORE rating?