Today was an interesting day to be a political data journalist. It’s not every day that the president advances some controversial theories in public opinion research by [*checks notes*] commissioning a report from his pollsters that abuses other data in order to discredit prominent media pollsters. But let’s look beyond how he said it (go here for a quick brief) and consider the substance of the president’s criticism.

In essence, the president’s consultants alleged that popular live-caller polls have too many Democrats in them, biasing the numbers against the president. For the particular polls his team analyzed, that doesn’t seem true (at least not prima facie). Twitter was right to call him out.

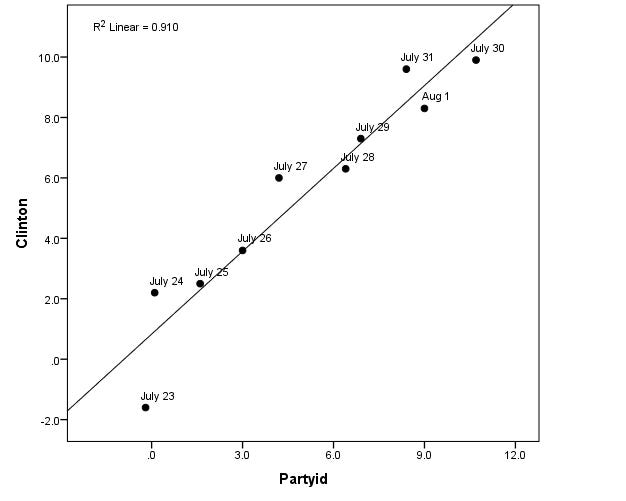

But the objection itself is theoretically sound: if a poll did have too many Democrats in it, it probably would overestimate support for Democratic candidates. No surprise there. There’s even some evidence that this happens in practice.

So how could a firm end up with a poll that has an unbalanced partisan sample? One way is that, just by random chance, either the Democrats or the Republicans they call are less likely to answer the phone than members of the other party. That wouldn’t be a big problem if it happened randomly in just one poll, but it’s also possible for this to happen across pollsters at certain junctures in the campaign. This is called “partisan nonresponse,” and despite objections from some analysts, it’s a very real phenomenon.
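To make the mechanism concrete, here is a minimal sketch of how differential response rates shift an unweighted poll. Everything in it is hypothetical: the party shares, each party’s Biden-minus-Trump margin, and the response rates are illustrative numbers, not real data, and the function names are made up for this example. It computes the *expected* result, so ordinary sampling error would come on top of this.

```python
# Hypothetical electorate (illustrative numbers, not real data): each party's
# share of the population and its Biden-minus-Trump margin.
POPULATION = {
    "Democrat": {"share": 0.35, "margin": 0.90},
    "Republican": {"share": 0.30, "margin": -0.85},
    "Independent": {"share": 0.35, "margin": 0.05},
}


def expected_composition(response_rates):
    """Expected party mix among completed interviews, given per-party response rates."""
    # A party's share of respondents is proportional to population share * response rate.
    raw = {p: g["share"] * response_rates[p] for p, g in POPULATION.items()}
    total = sum(raw.values())
    return {p: v / total for p, v in raw.items()}


def expected_unweighted_margin(response_rates):
    """Margin an unweighted poll would show, on average, under those response rates."""
    mix = expected_composition(response_rates)
    return sum(mix[p] * POPULATION[p]["margin"] for p in POPULATION)


equal = {"Democrat": 0.05, "Republican": 0.05, "Independent": 0.05}
skewed = {"Democrat": 0.05, "Republican": 0.045, "Independent": 0.05}  # Republicans answer less often

print(f"margin, equal response:  {expected_unweighted_margin(equal):+.4f}")
print(f"margin, skewed response: {expected_unweighted_margin(skewed):+.4f}")
print(f"Republican share of sample: {expected_composition(skewed)['Republican']:.3f}")
```

In this toy setup, Republicans answering at 4.5% instead of 5% shrinks their share of the sample from 30% to under 28% and pushes the expected margin from +7.75 to roughly +10.6 points, with no change at all in how anyone intends to vote.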

Does this mean that every time the polls move against your party, you should go around claiming nonresponse is the culprit? Of course not. But at the very least, pollsters and journalists should consider the possibility when examining their results. For example, just eyeballing the crosstabs from the most recent NBC/WSJ polls, I can see that the share of voters identifying as Republican has fallen, and so has Trump’s margin. (This is a bit puzzling, as NBC’s pollsters purport to control for partisanship; if they do, we shouldn’t be seeing swings in the partisan composition of their polls.) CNN’s data, on the other hand, isn’t weighted by party or past vote and shows much more volatility. As a result, the share of Trump-supporting Democrats and Independents, or Biden-supporting Republicans and Independents, is freer to move around in their data than in other polls.

I think there are some pretty clear benefits to controlling for the partisan composition of polls. We shouldn’t “unskew” them by adjusting them to arbitrary baselines, of course, but if we can at least adjust for aggregate differences between pollsters that make the correction (sensibly!) and those that don’t, we can probably spot trends in nonresponse (or other sources of bias) before they mess up our estimates.
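For readers wondering what “controlling for partisan composition” looks like mechanically, here is a minimal sketch of the simplest version: weighting respondents so the sample’s party-ID mix matches a target. The sample and the target shares are hypothetical, and the function names are invented for illustration. Real pollsters typically rake on several variables at once rather than weighting on party alone, and choosing a defensible partisan target (rather than an arbitrary one) is the genuinely contentious part.

```python
from collections import Counter


def party_weights(parties, targets):
    """Weight per party so the weighted party mix equals `targets` (hypothetical shares)."""
    counts = Counter(parties)
    n = len(parties)
    weights = {}
    for party, target in targets.items():
        if counts[party] == 0:
            raise ValueError(f"no respondents identify as {party}; cannot weight")
        # Target share divided by observed share: >1 boosts under-represented groups.
        weights[party] = target / (counts[party] / n)
    return weights


def margin(respondents, weights=None):
    """Biden-minus-Trump margin; vote is +1 (Biden), -1 (Trump), or 0 (other/undecided)."""
    w = [1.0 if weights is None else weights[party] for party, _ in respondents]
    return sum(wi * vote for wi, (_, vote) in zip(w, respondents)) / sum(w)


# Hypothetical sample of 100 respondents that over-represents Democrats (40%).
sample = (
    [("Democrat", 1)] * 37 + [("Democrat", -1)] * 3
    + [("Republican", 1)] * 2 + [("Republican", -1)] * 23
    + [("Independent", 1)] * 18 + [("Independent", -1)] * 14 + [("Independent", 0)] * 3
)
# Hypothetical partisan targets; in practice this benchmark has to be justified.
targets = {"Democrat": 0.35, "Republican": 0.30, "Independent": 0.35}

weights = party_weights([party for party, _ in sample], targets)
print(f"unweighted margin: {margin(sample):+.4f}")
print(f"weighted margin:   {margin(sample, weights):+.4f}")
```

Here weighting pulls the hypothetical margin from +17 to +8.55 points. Note that this only fixes the partisan mix of the sample: if the Republicans who do pick up the phone differ from the ones who don’t, party weights alone won’t catch that.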