Polling and forecasting in 2024 and beyond | #215 - May 14, 2023

Pollsters are "worried about Trump" again. But the problems in public opinion surveys run much deeper

Dear readers, here is a brief note relevant to the nerds.

I did not attend this year’s annual meeting of the American Association for Public Opinion Research (AAPOR), held last week in Philadelphia, because professional obligations kept me in Washington. Luckily, the conference had plenty of highly engaged Twitter users who posted grainy photos of PowerPoint slides, which give us a good public look into how America’s pollsters, both public and private, are addressing some deep-rooted problems in opinion research.

Here is some reporting on the conference from POLITICO and the New York Times. Both articles are good and I recommend reading them. And here is one Tweet I find particularly consequential, and another I think is funny.

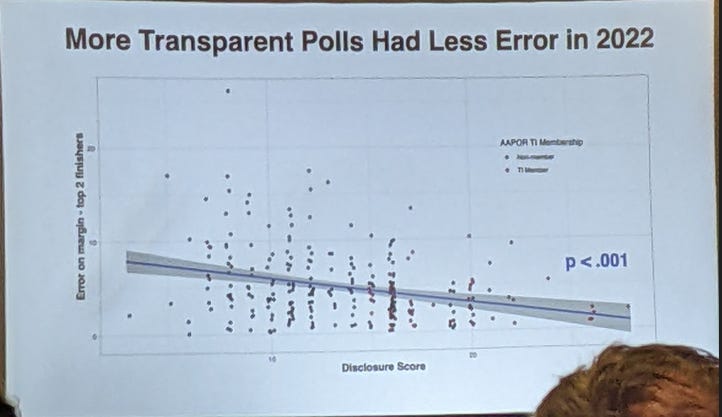

For my part, however, I want to draw attention to one single chart. It comes courtesy of a post by Ariel Edwards-Levy, CNN’s Editor of Polling and Analytics, about a presentation by Mark Blumenthal (who helped start the polling aggregation site Pollster.com around 2006/2007). It shows that pollsters who score higher on his measure of firm-level transparency, which he calls their “Disclosure Score,” had much less error in predicting election outcomes in 2022 than firms that were more opaque:

This matches a long-standing finding in election forecasting/polling analysis. Nate Silver, for example, found in constructing his popular pollster ratings that members of AAPOR’s Transparency Initiative (TI) have higher accuracy in predicting election results, leading to a sizable bonus for these firms’ ratings. To my surprise, I found a similar pattern in analyzing the bias and variance of pollsters’ pre-election vote share readings for The Economist’s election models last year. (This was a modest surprise because of the industry’s poor performance in 2016 and 2020.)

This is an important trend to highlight for two reasons. First, it affirms a strong prior belief that firms that hide their methods do in fact have something to hide. That includes some notable firms such as Trafalgar Group and Rasmussen Reports, which appear to be hiding methodological tricks that push their numbers profoundly to the political right, but also lower-name-recognition firms that are taking shortcuts to their results. Those shortcuts might include sourcing data from low-quality, low-cost online marketplaces for interviews; weighting to population targets that are outdated or incomplete; or even writing questions that are clearly intended to extract a certain response from respondents.

The overperformance of AAPOR TI members and firms with high “Disclosure Scores” also suggests we should treat newer pollsters with skepticism. Maybe that new firm that promises it found a new way to account for partisan non-response, say, has not in fact stumbled upon the holy grail. It means that a random poll from a randomly selected established firm that revises its methodologies to meet new challenges of surveying is probably better than a random poll from some upstart company with a political agenda. And both of those are good things for political opinion polling!

But second, and along similar lines, the fact that we’re still seeing this pattern of TI-member overperformance also affirms a much longer view of polling as an evolving industry that rises to the occasion even after disastrous defeat. List the years in which pollsters have faced a “catastrophic” error in election prediction or exit polling and you will find yourself with a nearly complete list of US presidential election years since the 1930s. There were big misses in 1936, 1948, 1952, 1964, 1980, 2000, 2004, 2012, 2016 and 2020. But the long-term trend in election polling is still towards methods that yield lower prediction errors over time. This is a thread I trace in my book, and I think it predicts what’s going to happen to the industry in the future.

As demonstrated by a surprising poll from Langer Research Associates/ABC News/Washington Post released last week, the old way of asking 1,000 random Americans a bunch of questions about politics (randomly calling them and seeing if they have the time) is prone to giving us some very wacky readings of public opinion. It’s also cost-prohibitive and takes a long time. But at AAPOR there were several panels devoted to new methodologies of weighting, sampling, predicting turnout &c that have recently bested these old tools. And there are some very sophisticated proposals that do not sit squarely at one end of the spectrum running from a quality sample to a quality model of responses, but do both at the same time. These are becoming a lot easier to use thanks to advances in computation and software. And that is what the future of polling looks like.

According to POLITICO’s reporting, many observers and even some pollsters think that Trump’s presence on the ballot is what has caused polls to go wrong in recent years. 2022 and 2018 were good years, they say. And maybe they are right. But in fact, Trump’s presence has not caused the industry’s issues; it has merely exacerbated them. The big problem is the combination of low response rates and high demographic polarization. Pollsters subscribing to the Trump-driven theory of polling error may choose to ignore those issues.

But the tools of the future will not paper over the challenges the industry faces today. Pollsters cannot simply cross their fingers and hope to get a representative sample (see: 2016, 2020). The firms that survive the next challenges of election prediction will be (1) methodologically transparent and (2) willing to innovate. To see this future for the industry you need only look to the past.

Talk to you next time,

Elliott

Feedback

That’s it for this week. Thanks very much for reading. If you have any feedback, you can reach me at gelliottmorris@substack.com, or just respond directly to this email if you’re reading it in your inbox.

No AI, please.